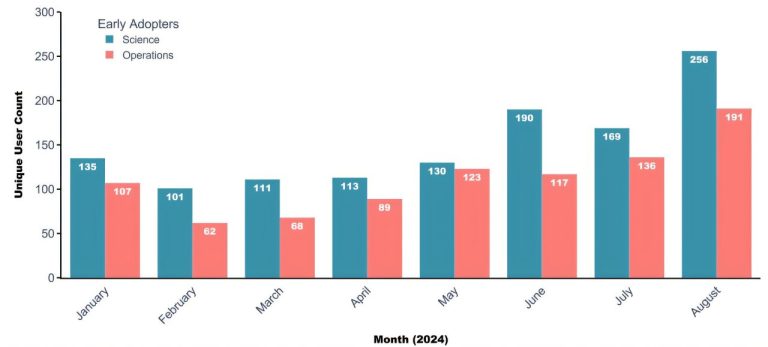

Argo usage as measured by unique operations and science users for each month since initial deployment. Monthly usage is less than 10% of all laboratory employees. Note: This plot does not capture external LLM usage and is based on automatically collected telemetry data. Credit: arXiv (2025). DOI: 10.48550/arXiv.2001.16577

The researchers studied Argonne employees' use of Argo, an internal generative artificial intelligence chatbot.

Generative artificial intelligence (AI) is becoming an increasingly powerful tool in the workplace. In scientific organizations such as national laboratories, its use has the potential to accelerate scientific discovery in critical areas.

But new tools bring new questions. How can scientific organizations implement generative AI responsibly? And how do employees in different roles use generative AI in their daily work?

A recent study by the University of Chicago and the U.S. Department of Energy's Argonne National Laboratory provides one of the first real-world examinations of how generative AI tools, large language models (LLMs) in particular, are being used in a national laboratory.

The study not only highlights AI's potential to improve productivity, but also emphasizes the need for thoughtful integration to address concerns in areas such as privacy, security and transparency. The paper is published on the arXiv preprint server.

Through surveys and interviews, the researchers studied how Argonne employees are already using LLMs, and how they plan to use them in the future, to generate content and automate workflows. The study also tracked the early adoption of Argo, the laboratory's internal LLM interface, released in 2024. Based on their analysis, the researchers recommend ways organizations can support the effective use of generative AI while addressing the associated risks.

On April 26, the team presented its results at the Association for Computing Machinery's 2025 Conference on Human Factors in Computing Systems (CHI) in Japan.

Argonne and Argo – A case study

Argonne's organizational structure, combined with the timely release of Argo, made the laboratory an ideal environment for the study. Its workforce includes science and engineering workers as well as operations workers in areas such as human resources, facilities and finance.

“Science is an area where human-machine collaboration can lead to important breakthroughs for society,” said Kelly Wagman, a Ph.D. student in computer science at the University of Chicago and lead author of the study. “Science and operations workers are both crucial to the success of a laboratory, so we wanted to explore how each group engages with AI and where their needs align and diverge.”

While the study focused on a national laboratory, some of the findings may extend to other organizations such as universities, law firms and banks, which have varied users and similar cybersecurity challenges.

Argonne employees regularly work with sensitive data, including unpublished scientific results, controlled unclassified documents and proprietary information. In 2024, the laboratory launched Argo, which gives employees secure access to LLMs from OpenAI through an internal interface. Argo does not store or share user data, making it a more secure alternative to ChatGPT and other commercial tools.

Argo was the first internal generative AI interface to be deployed at a national laboratory. For several months after Argo's launch, the researchers tracked how it was used across different parts of the laboratory. The analysis revealed a small but growing user base of science and operations workers.

“Generative AI technology is new and evolving rapidly, so it is difficult to anticipate exactly how people will integrate it into their work until they start using it. This study provided valuable feedback that is informing the next iterations of Argo's development,” said Argonne software engineer Matthew Dearing, whose team develops tools to support the laboratory's mission.

Dearing, who holds a joint appointment at UChicago, collaborated on the study with Wagman and Marshini Chetty, a professor of computer science and head of the Amyoli Internet Research Laboratory at the university.

Collaboration and automation with AI

Researchers found that employees used generative AI in two main ways: as a copilot and as a workflow agent. As a copilot, the AI works alongside the user, helping with tasks such as writing code, structuring text or refining the tone of an email. For the most part, employees currently stick to tasks where they can easily check the AI's work. In the future, employees said they envision using copilots to extract insights from large amounts of text, such as scientific literature or survey data.

As a workflow agent, AI is used to automate complex tasks, which it performs mostly on its own. About a quarter of the open-ended survey responses, split evenly between operations and science workers, mentioned workflow automation, but the types of workflows differed between the two groups. For example, operations workers used AI to automate processes such as searching databases or tracking projects. Scientists reported automating workflows for processing, analyzing and visualizing data.

“Science often involves very bespoke workflows with many steps. People are finding that with LLMs, they can create the glue to connect these processes,” said Wagman. “This is just the beginning of more complicated automated workflows for science.”

Expanding possibilities while mitigating risks

Although generative AI presents exciting opportunities, the researchers also emphasize the importance of thoughtfully integrating these tools to manage organizational risks and address employee concerns.

The study found that employees were significantly concerned about generative AI's reliability and its tendency to hallucinate. Other concerns included data privacy and security, overreliance on AI, potential impacts on hiring and implications for scientific publishing and citation.

To promote the appropriate use of generative AI, the researchers recommend that organizations proactively manage security risks, establish clear policies and offer employee training.

“Without clear guidelines, there will be a lot of variability in what people think is acceptable,” said Chetty. “Organizations can also reduce security risks by helping people understand what happens with their data when they use both internal and external tools: Who can access the data? What is the tool doing with it?”

At Argonne, nearly 1,600 employees have attended the laboratory's generative AI training sessions. These sessions introduce employees to Argo and generative AI and provide guidance for appropriate use.

“We knew that if people were going to get comfortable with Argo, it wasn't going to happen on its own,” said Dearing. “Argonne is leading the way in providing generative AI tools and shaping how they are integrated responsibly at national laboratories.”

More information:

Kelly B. Wagman et al, Generative AI Uses and Risks for Knowledge Workers in a Science Organization, arXiv (2025). DOI: 10.48550/arXiv.2001.16577

Provided by

Argonne National Laboratory

Citation: Study explores how workers use large language models and what it means for science organizations (2025, April 29), retrieved April 29, 2025 from https://techxplore.com/news/2025-04-04-explores-workers-large-language-science.html
