In the spirit of a technology developed by AI firm Anthropic, Microsoft sees the future of AI as one where many different systems, created by many different companies, all work together in peace and harmony. Or, to put it in the words Microsoft itself has used, it wants to create an “agentic web”.

That’s according to a report by Reuters, which relayed the views of Microsoft’s chief technology officer, Kevin Scott, ahead of the software company’s annual Build conference. What Scott hopes to achieve is for Microsoft’s AI agents to work happily with those of other companies, via a standard platform called Model Context Protocol (MCP).

This is an open-source standard created by Anthropic, an AI company that is only four years old. The idea behind it is that it makes it easier for AI systems to access and share data for training purposes and, in the specific field of AI agents, it should help them perform their tasks better.

Basically, AI agents are a type of artificial intelligence system that performs a very specific task, such as searching through code for a certain bug and then fixing it. They run autonomously, analyzing data and then making a decision based on rules established during the AI’s training. Agentic AI, to use the proper name for all this, has a wide range of potential applications, such as cybersecurity and customer support, but it is only as good as the data on which it has been trained.

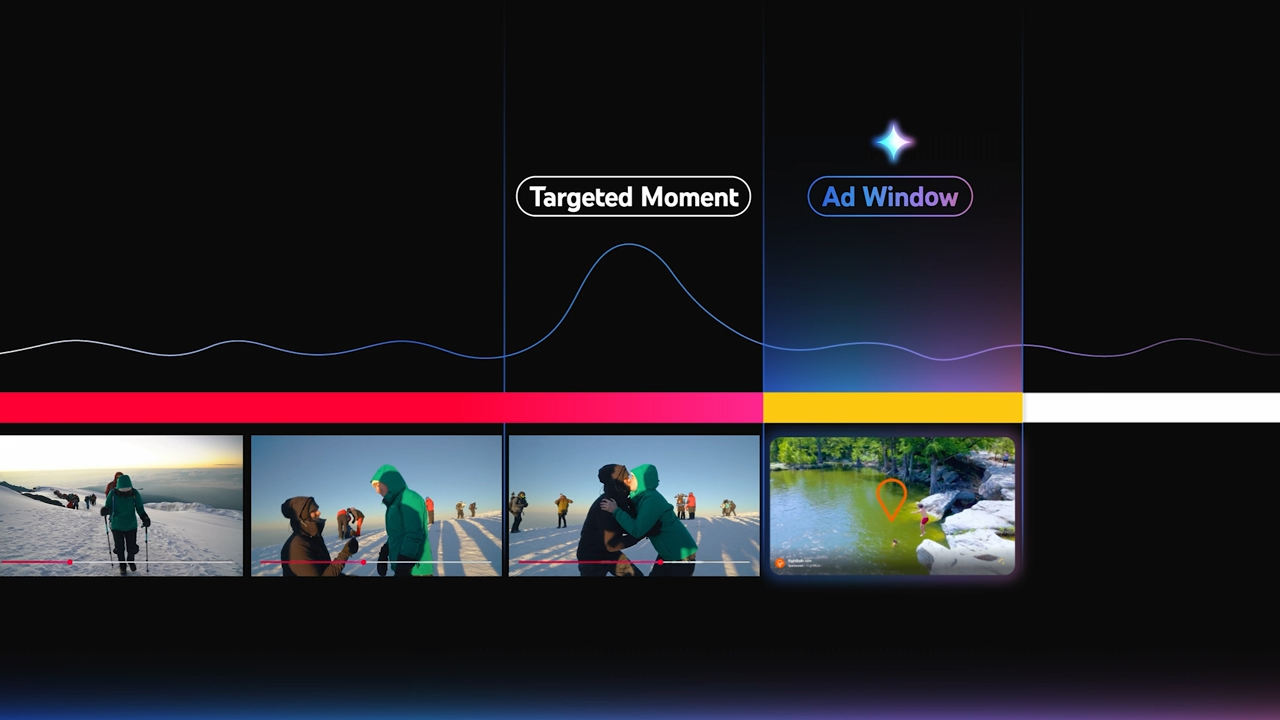

Enter, stage left, MCP, which essentially allows AI agents to work hand in hand (or should that be tensor in tensor?) with other agents to improve the accuracy of their results. According to Reuters, Scott pointed out that “MCP has the potential to create an ‘agentic web’ similar to the way in which hypertext protocols helped spread the internet in the 1990s.”

It is not only a question of training data, because the accuracy of agentic AI depends heavily on something called reinforcement learning. Similar to the way in which “rewards” and “punishments” affect animal behavior, reinforcement learning helps AI agents focus on optimizing their outputs according to which one earns the greatest reward.
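To make the reward idea concrete, here is a toy sketch of reward-driven learning in Python, using a simple epsilon-greedy scheme. It is an illustration only, not how any real agent is trained: the three strategy names, their reward values and the `train` and `get_reward` functions are all made up for this example.

```python
import random

# Hypothetical strategies and their average rewards. These numbers are
# illustrative assumptions, not taken from any real agent.
TRUE_MEAN_REWARD = {"guess": 0.2, "search": 0.5, "search_and_verify": 0.8}


def get_reward(action, rng):
    """Return a noisy reward: the action's mean plus small bounded noise."""
    return TRUE_MEAN_REWARD[action] + rng.uniform(-0.05, 0.05)


def train(steps=500, epsilon=0.1, seed=0):
    """Learn by trial and error which action earns the highest reward."""
    rng = random.Random(seed)
    actions = list(TRUE_MEAN_REWARD)
    estimates = {a: 0.0 for a in actions}  # running average reward per action
    counts = {a: 0 for a in actions}       # how often each action was tried

    for step in range(steps):
        if step < len(actions):
            action = actions[step]                    # try every action once
        elif rng.random() < epsilon:
            action = rng.choice(actions)              # explore at random
        else:
            action = max(actions, key=estimates.get)  # exploit the best so far

        reward = get_reward(action, rng)
        counts[action] += 1
        # Incremental update of the running average for the chosen action.
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


estimates, counts = train()
best = max(estimates, key=estimates.get)
print("Preferred strategy:", best)
```

The agent is never told which strategy is best. It only sees the rewards, and it gradually settles on whichever strategy pays off most, while still occasionally trying the others.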

Having AI agents share what works and what doesn’t would certainly be useful in reinforcement learning, but it does raise the question of what happens if the agents are simply left to their own devices. Are we simply to assume that the network of agents will never accidentally choose a negative strategy over a positive one? What mechanisms would need to be put in place to prevent an “agentic web” from spiraling into a negative feedback loop?

Better brains than mine will surely have raised the same questions by now and, hopefully, developed systems to prevent all this from happening.

In the same way that certain stocks and shares are automatically bought and sold by computers, without any human interaction, we could be approaching the point where many aspects of our lives are decided for us by a huge network of interconnected AI agents.

For example, customer support services for banks, emergency services and other vital systems may well be run entirely by AI agents within a decade or so.

I am not knowledgeable enough about AI to judge meaningfully whether this is a very good thing or a very bad one, but my gut feeling suggests that the reality of the situation will end up being somewhere between the two extremes. Let’s just hope that it is much closer to the former than the latter, yes?