Since the artificial intelligence (AI) research organization OpenAI launched its generative AI chatbot ChatGPT in November 2022, AI has been regarded as a supremely disruptive technology that will soon fundamentally transform our lives, for better or for worse. Some believe that the impact of AI will be similar to that of the Industrial Revolution (1760-1840); others see it as a “superintelligent” agent that could go rogue and potentially take over humanity.

Is that likely? Not according to AI researchers from Princeton University, who have argued that it will take decades, not years, for AI to transform society in the ways that big AI labs and companies have predicted. Here is why.

Why do you think AI is actually a “normal” technology, one whose transformative economic and societal impacts will be slow?

Arvind Narayanan: AI being a “normal” technology is the common-sense view. We hear about supposed AI breakthroughs every day, but how much of it is real? And even if the technology is advancing rapidly, our ability to use it productively is limited because there is a learning curve. If anything, the stories about rapid adoption are even more exaggerated than the stories about AI breakthroughs.

When we pull back the curtain, AI is not so different from other technologies, such as the Internet. This does not mean that it will not be transformative. But if it is transformative, that will happen over decades, not months or years.

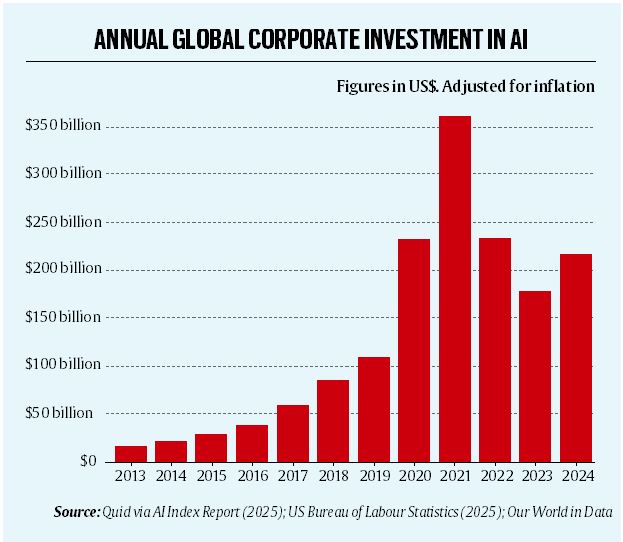

Global annual corporate investment in AI.

But many have argued that AI is different from any technology of the past, and that its future influence will be non-linear.

Sayash Kapoor: We do not think that AI is different from other past technologies in its patterns of technological development and societal impact. We have tried to describe a vision of the future where AI is neither utopian nor dystopian, and where we can learn from the way previous general-purpose technologies have affected the world.

For example, many AI loss-of-control scenarios assume that AI systems would gain enormous power without first proving their reliability in lower-stakes contexts. In our opinion, this contradicts the way organizations actually adopt technology. We believe there are many reasons to expect that companies can ensure humans retain control of the AI systems they adopt.

The deployment of technologies like self-driving cars also shows this pattern: safety leaders like Waymo (a subsidiary of Alphabet, Google's parent company, which provides driverless ride services) have survived, while laggards such as Cruise have failed. (In December 2024, General Motors, which held 90% of Cruise, said that it would stop funding the driverless robotaxi service.)

We expect that poorly controlled AI will not make business sense, and policy interventions can strengthen the incentives to ensure human control.

Your “AI as Normal Technology” essay argues that the impact of AI will be slow, based on something called “the innovation-diffusion feedback loop”. What does that mean?

AN: For much of the last decade, generative AI improved rapidly because companies trained their models on increasingly large data sets from the Internet. That era is now over. AI has learned more or less everything it can learn from the Internet.

In the future, AI will have to learn by interacting with people, gaining experience in the real world and being deployed in real companies and organizations, because these organizations run on a great deal of so-called tacit knowledge that is not written down anywhere.

In other words, as AI becomes more capable, people will gradually adopt it, and as more people adopt it, AI developers will have more real-world experience with which to improve AI capabilities. This is the innovation-diffusion feedback loop. But because it involves changes in human behavior, we predict that it will happen slowly.

A common fear is that if AI capabilities continue to improve indefinitely, AI could soon make human labor redundant. Why do you disagree?

SK: If we look at how previous general-purpose technologies such as electricity and the Internet were adopted, it took decades before their raw capabilities translated into economic impact. That is because the process of diffusion, in which companies and governments adopt general-purpose technologies, unfolds slowly, over decades.

As this process unfolds, the progress and adoption of AI would be uneven. Automated tasks would quickly become cheaper and lose value, and human work would shift to the parts not affected by automation. In addition, human control would be an essential element of many jobs, which would involve human oversight of automated systems.

Why do you think it is necessary to focus on the risks of AI that arise in the deployment phase (when AI models are used for specific tasks) rather than the development phase (when AI models are trained)?

SK: It is not enough to develop larger or better AI models to realize their impact; the societal impact of AI is realized when the technology is adopted in the productive sectors of the economy. This is a crucial intervention point for both the benefits and the risks of AI.

To realize the benefits of AI, policy interventions must focus much more on adoption, for example by training the workforce or establishing clear standards for procuring AI tools.

Likewise, to address the risks of AI, it is not enough to align AI models with human values. We must address reliability concerns, for example by developing fail-safes in the event of malfunction. These cannot be addressed at the development stage alone; different deployment environments would require different defenses.

In March 2023, several technology experts and executives (including Elon Musk, Steve Wozniak, Andrew Yang and Rachel Bronson) called for a temporary pause on the development of AI systems because of the risks they could pose to society. Will such interventions work?

AN: Suppose governments try to defend against the risks of AI by trying to prevent terrorists or other adversaries from getting access to AI. Here is what will happen.

They will have to take draconian measures and curb people's digital freedoms to ensure that no one can train and release a downloadable AI system on the open web. This makes society less democratic, and therefore less resilient.

It won’t even work. At some point, these non-proliferation attempts will fail, as the cost of creating powerful AI systems continues to drop rapidly. And when they fail, we will face these risks all at once and have few defenses against them.

On the other hand, a “resilience” approach (which involves preventing the concentration of power and resources) actually promotes the availability of open models and systems. That leads to a gradual increase in risks, and we can gradually increase our defenses in proportion. Essentially, we can build an “immune system”. As with diseases, immunity is a more resilient approach than suppression.

Arvind Narayanan is director of the Center for Information Technology Policy, Princeton University. Sayash Kapoor is a doctoral candidate in computer science at the Center for Information Technology Policy. They are the authors of the essay “AI as Normal Technology: An alternative to the vision of AI as a potential superintelligence”, published in April 2025, and the book AI Snake Oil: What Artificial Intelligence Can Do, What It Can't, and How to Tell the Difference (2024).

They wrote to Alind Chauhan by email. Edited excerpts.